Data parallelism


Data parallelism (also known as loop-level parallelism) is a form of parallelization of computing across multiple processors in parallel computing environments. Data parallelism focuses on distributing the data across different parallel computing nodes. It contrasts with task parallelism, another form of parallelism.


Description

In a multiprocessor system executing a single set of instructions (SIMD), data parallelism is achieved when each processor performs the same task on different pieces of distributed data. In some situations, a single execution thread controls operations on all pieces of data. In others, different threads control the operation, but they execute the same code.

For instance, consider a 2-processor system (CPUs A and B) in a parallel environment, where we wish to perform a task on some data d. It is possible to tell CPU A to do that task on one part of d and CPU B on another part simultaneously, thereby reducing the duration of the execution. The data can be assigned using conditional statements, as described below. As a specific example, consider adding two matrices. In a data parallel implementation, CPU A could add all elements from the top half of the matrices, while CPU B could add all elements from the bottom half. Since the two processors work in parallel, the matrix addition would ideally take half the time of performing the same operation serially on one CPU alone, ignoring the overhead of coordinating the two processors.

Data parallelism emphasizes the distributed (parallelized) nature of the data, as opposed to the processing (task parallelism). Most real programs fall somewhere on a continuum between task parallelism and data parallelism.
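The matrix-addition example above can be sketched in Java as follows. This is a minimal illustration rather than a tuned implementation: it assumes two plain java.lang.Thread objects stand in for CPUs A and B, that both matrices have the same shape, and the class name HalfSplitMatrixAdd is hypothetical. With an odd number of rows, the bottom half gets one extra row.

```java
import java.util.Arrays;

// Sketch (assumption: two Java threads stand in for "CPU A" and "CPU B").
// Adds two matrices element by element; one thread handles the top half
// of the rows, the other handles the bottom half.
class HalfSplitMatrixAdd {

    // Adds rows [rowStart, rowEnd) of a and b into c.
    static void addRows(double[][] a, double[][] b, double[][] c,
                        int rowStart, int rowEnd) {
        for (int r = rowStart; r < rowEnd; r++) {
            for (int col = 0; col < a[r].length; col++) {
                c[r][col] = a[r][col] + b[r][col];
            }
        }
    }

    // Assumes a and b have the same shape.
    static double[][] add(double[][] a, double[][] b) {
        int rows = a.length;
        double[][] c = new double[rows][];
        for (int r = 0; r < rows; r++) {
            c[r] = new double[a[r].length];
        }
        int mid = rows / 2;
        // The threads write to disjoint rows of c, so no locking is needed.
        Thread cpuA = new Thread(() -> addRows(a, b, c, 0, mid));    // top half
        Thread cpuB = new Thread(() -> addRows(a, b, c, mid, rows)); // bottom half
        cpuA.start();
        cpuB.start();
        try {
            // join() waits for both halves and makes their writes visible here.
            cpuA.join();
            cpuB.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while adding", e);
        }
        return c;
    }

    public static void main(String[] args) {
        double[][] a = {{1, 2}, {3, 4}, {5, 6}};
        double[][] b = {{10, 20}, {30, 40}, {50, 60}};
        System.out.println(Arrays.deepToString(add(a, b)));
        // prints [[11.0, 22.0], [33.0, 44.0], [55.0, 66.0]]
    }
}
```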

Example

The program below, expressed in pseudocode, applies some arbitrary operation foo to every element in the array d and illustrates data parallelism:[nb 1]

```
define foo
if CPU = "a"
    lower_limit := 1
    upper_limit := round(d.length/2)
else if CPU = "b"
    lower_limit := round(d.length/2) + 1
    upper_limit := d.length

for i from lower_limit to upper_limit by 1
    foo(d[i])
```

If the above example program is executed on a 2-processor system, the runtime environment may execute it as follows:

- In an SPMD system, both CPUs will execute the code.
- In a parallel environment, both will have access to d.
- A mechanism is presumed to be in place whereby each CPU will create its own copy of lower_limit and upper_limit that is independent of the other.
- The if clause differentiates between the CPUs. CPU "a" will read true on the if, and CPU "b" will read true on the else if, so each has its own values of lower_limit and upper_limit.
- Now both CPUs execute foo(d[i]), but since each CPU has different values of the limits, they operate on different parts of d simultaneously, thereby distributing the task between themselves. This is typically faster than doing it on a single CPU, as long as the work done by foo outweighs the overhead of coordinating the two CPUs.
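A minimal Java rendering of the pseudocode follows, assuming two threads stand in for CPUs "a" and "b" and that foo is a hypothetical operation that doubles an element in place (the class name TwoCpuSpmd is also hypothetical). Integer division is used for round(d.length/2), which rounds towards zero; the 1-based limits are kept and shifted by one when indexing Java's 0-based arrays. Because lower_limit and upper_limit are local variables, each thread automatically gets its own independent copy, which is the mechanism the list above presumes.

```java
import java.util.Arrays;

// Sketch: every thread runs the same body (SPMD style) but chooses its own
// half of d from its CPU name. foo is a hypothetical stand-in operation.
class TwoCpuSpmd {

    // Hypothetical foo: doubles element i (0-based) in place.
    static void foo(int[] d, int i) {
        d[i] = d[i] * 2;
    }

    // The code every "CPU" executes. The limits are locals, so each thread
    // has its own copy, independent of the other thread's.
    static void body(String cpu, int[] d) {
        int half = d.length / 2; // integer division rounds towards zero
        int lowerLimit;
        int upperLimit;
        if (cpu.equals("a")) {
            lowerLimit = 1;
            upperLimit = half;
        } else if (cpu.equals("b")) {
            lowerLimit = half + 1;
            upperLimit = d.length;
        } else {
            throw new IllegalArgumentException("unknown CPU: " + cpu);
        }
        // 1-based limits as in the pseudocode; subtract 1 for Java arrays.
        // If lowerLimit > upperLimit, the loop body runs zero times.
        for (int i = lowerLimit; i <= upperLimit; i++) {
            foo(d, i - 1);
        }
    }

    static void run(int[] d) {
        Thread a = new Thread(() -> body("a", d));
        Thread b = new Thread(() -> body("b", d));
        a.start();
        b.start();
        try {
            a.join();
            b.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        int[] d = {1, 2, 3, 4, 5};
        run(d);
        System.out.println(Arrays.toString(d)); // prints [2, 4, 6, 8, 10]
    }
}
```

With d.length equal to 1, thread "a" computes lowerLimit = 1 and upperLimit = 0 and performs no iterations, while thread "b" processes the single element.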

This concept can be generalized to any number of processors. However, when the number of processors increases, it may be helpful to restructure the program as follows (where cpuid is an integer between 1 and the number of CPUs, and acts as a unique identifier for every CPU):

```
for i from cpuid to d.length by number_of_cpus
    foo(d[i])
```
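The cyclic loop above can be sketched in Java with one thread per CPU. This is an illustration under stated assumptions: threads stand in for CPUs, cpuid runs from 1 to numberOfCpus as in the pseudocode, the class name CyclicSpmd is hypothetical, and the hypothetical foo only records which cpuid touched each element so that the distribution of work is visible.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Sketch of "for i from cpuid to d.length by number_of_cpus" with
// one Java thread per CPU.
class CyclicSpmd {

    // Hypothetical foo: records which cpuid processed element i (0-based).
    static void foo(int[] owner, int i, int cpuid) {
        owner[i] = cpuid;
    }

    // Returns, for each element of an array of the given length, the cpuid
    // (1..numberOfCpus) of the thread that processed it.
    static int[] distribute(int length, int numberOfCpus) {
        int[] owner = new int[length];
        List<Thread> workers = new ArrayList<>();
        for (int cpuid = 1; cpuid <= numberOfCpus; cpuid++) {
            final int id = cpuid;
            Thread t = new Thread(() -> {
                // 1-based stride loop from the pseudocode; subtract 1 for Java arrays.
                for (int i = id; i <= length; i += numberOfCpus) {
                    foo(owner, i - 1, id);
                }
            });
            workers.add(t);
            t.start();
        }
        for (Thread t : workers) {
            try {
                t.join(); // wait for every worker before reading owner
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return owner;
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(distribute(7, 3)));
        // prints [1, 2, 3, 1, 2, 3, 1]
    }
}
```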

For example, on a 2-processor system CPU A (cpuid 1) will operate on odd entries and CPU B (cpuid 2) will operate on even entries.

JVM Example

As in the previous example, data parallelism is also possible on the Java Virtual Machine (JVM), for instance using Ateji PX, an extension of Java.

The code below illustrates data parallelism on the JVM. Branches in a parallel composition, written with the || operator,[1] can be quantified. This is used to apply the same operation to all elements of an array or a collection:

```
[
  // increment all array elements in parallel
  || (int i : N) array[i]++;
]
```

The equivalent sequential code would be:

```
[
  // increment all array elements one after the other
  for (int i : N) array[i]++;
]
```
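Ateji PX syntax is not accepted by a standard Java compiler. As a rough standard-Java comparison (a swapped-in technique, not how Ateji PX itself works), the same element-wise increment can be written with a parallel java.util.stream.IntStream in Java 8 or later. Here N is assumed to be array.length, and the class and method names are hypothetical.

```java
import java.util.Arrays;
import java.util.stream.IntStream;

// Sketch: "increment all array elements" in standard Java, in parallel
// and sequentially, for comparison with the Ateji PX snippets.
class IncrementAll {

    // Parallel: the index range is split among fork/join worker threads.
    // Safe without locks because each index i is updated by exactly one task.
    static void incrementParallel(int[] array) {
        IntStream.range(0, array.length).parallel().forEach(i -> array[i]++);
    }

    // Sequential equivalent: one element after the other.
    static void incrementSequential(int[] array) {
        for (int i = 0; i < array.length; i++) {
            array[i]++;
        }
    }

    public static void main(String[] args) {
        int[] a = {1, 2, 3, 4};
        int[] b = a.clone();
        incrementParallel(a);
        incrementSequential(b);
        System.out.println(Arrays.toString(a) + " " + Arrays.equals(a, b));
        // prints [2, 3, 4, 5] true
    }
}
```

A parallel stream only pays off when the per-element work is large enough to outweigh the cost of splitting the range across worker threads; for a simple increment on a small array, the sequential loop is usually faster.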

Quantification can introduce an arbitrary number of generators (iterators) and filters. Here is how we would update the upper left triangle of a matrix:

```
[
  || (int i : N, int j : N, if i + j < N) matrix[i][j]++;
]
```
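In the same spirit, and again only as an approximation in standard Java 8+ rather than Ateji PX, the triangle update can be expressed by flattening the two generators i and j into a single parallel index range and applying the i + j < N condition as a stream filter. The sketch assumes a square N-by-N int matrix; the class name UpperLeftTriangle is hypothetical.

```java
import java.util.Arrays;
import java.util.stream.IntStream;

// Sketch: increment the upper-left triangle (i + j < N) of a square
// matrix in parallel, using standard Java streams instead of Ateji PX.
class UpperLeftTriangle {

    // Assumes matrix is square: n rows, each of length n.
    static void increment(int[][] matrix) {
        int n = matrix.length;
        IntStream.range(0, n * n)                       // flat index k encodes (i, j)
                .parallel()
                .filter(k -> k / n + k % n < n)         // filter: i + j < N
                .forEach(k -> matrix[k / n][k % n]++);  // i = k / n, j = k % n
    }

    public static void main(String[] args) {
        int[][] m = new int[3][3];
        increment(m);
        System.out.println(Arrays.deepToString(m));
        // prints [[1, 1, 1], [1, 1, 0], [1, 0, 0]]
    }
}
```

Each flat index k maps to a distinct cell, so the concurrent increments never touch the same element.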

Notes

- [nb 1] Some input data (e.g. when d.length evaluates to 1 and round rounds towards zero; this is just an example, there are no requirements on what type of rounding is used) will lead to lower_limit being greater than upper_limit. It is assumed that the loop will exit immediately (i.e. zero iterations will occur) when this happens.

References

- [1] Data Parallelism using Ateji PX, an extension of Java. http://www.ateji.com/px/patterns.html#data

- Hillis, W. Daniel and Steele, Guy L. "Data Parallel Algorithms". Communications of the ACM, December 1986.

- Blelloch, Guy E. Vector Models for Data-Parallel Computing. MIT Press, 1990. ISBN 0-262-02313-X

See also

- Active message

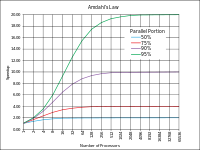

- Scalable Parallelism


Wikimedia Foundation. 2010.